Introduction to Unit Testing

So, what exactly is Unit Testing?

When we need to develop code, we first determine the inputs our code will receive and the outputs it should produce. Then, we write the required logic to process these inputs into the desired outputs.

A unit test is essentially a description of these inputs and the expected outputs. By writing unit tests, we can verify that the piece of code we are building fulfills these expectations. It’s like setting up mini-experiments to ensure each part of our program behaves correctly.
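As a minimal sketch of this idea, the inputs and expected outputs can be written down as plain data and checked against the code. The function add and the list EXPECTATIONS are hypothetical names chosen for illustration; they do not come from a real project.

```python
# Hypothetical code under test: adds two numbers.
def add(a, b):
    return a + b


# Each entry pairs the inputs with the output we expect (illustrative values).
EXPECTATIONS = [
    ((1, 2), 3),
    ((0, 0), 0),
    ((-1, 1), 0),
]


def test_add():
    # Check every described input against its expected output.
    for inputs, expected in EXPECTATIONS:
        assert add(*inputs) == expected, f"add{inputs} should be {expected}"


test_add()  # raises AssertionError if any expectation is not met
```

Because the function name starts with test_, pytest would also pick it up when the file is run through pytest.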

Unit testing ensures our code is reliable and maintainable, providing a solid foundation for our software projects. It’s like having a safety net that catches errors before they cause trouble, giving us peace of mind and making our coding adventures much smoother!

- Introduction to Unit Testing

- Needed tools

- How to learn a test framework?

- Test 1: to make it pass

- Other languages and test frameworks

- Test 2: to make it fail

- Test 3: to make it crash (neither pass nor fail)

- 🏋️ Exercise

- Test 4: Let’s automate the passed, failed, and crashed test

- 🏋️ Exercise

- Data driven testing

- 🏋️ Exercise

- Making unit-tests understandable

- 🏋️ Exercise

- Skipping tests

- 🏋️ Exercise

- Reporting

- Conclusion

Needed tools

With Python we can use PyTest or RobotFramework.

With Groovy we can use Spock.

With JavaScript we can use Mocha and Chai.

With C# I have no idea, but ChatGPT suggests NUnit and xUnit.

The test framework itself is not that important; the most important part is to use it wisely.

Often it is better to use a simple test framework and extend it with features that others have developed, or that you develop yourself.

How to learn a test framework?

Testing is the basis for knowledge and one of the best ways to learn something.

We simply make small tests (experiments) to see what succeeds, what fails, and what can be learned from it.

In the next steps I will give you some of these tests, so you can learn the test-framework you want in any coding language you want.

We will take it one test case at a time, just like we would develop with Test Driven Development :-)

I will first show the first unit-test in Python with pytest, and then show how AI can translate it to JavaScript with Mocha and Chai, then into Groovy and Spock.

Test 1: to make it pass

The first test we are going to make is to make it pass.

We need to install the framework:

    pip install pytest

and make a test.py file:

    import pytest

    def test_mustPass():
        assert 1+2==3, "this test must pass, but it passed :("

To run it we need to:

    pytest test.py

Which will give the following result:

    test.py . [100%]

    ========================== 1 passed in 0.00s =============================

Important: the function name needs the prefix test_, otherwise pytest will not run it as a test.
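To get a feeling for why the prefix matters, here is a rough sketch of prefix-based collection. It is not pytest's actual implementation; collect_tests and helper_not_a_test are hypothetical names used only for this illustration.

```python
def test_mustPass():
    assert 1 + 2 == 3


def helper_not_a_test():
    # No test_ prefix, so the collector below ignores this function.
    raise RuntimeError("never collected, so never called")


def collect_tests(namespace, prefix="test_"):
    """Return the names of callables whose name starts with the prefix."""
    return sorted(
        name
        for name, obj in namespace.items()
        if name.startswith(prefix) and callable(obj)
    )


# An explicit namespace keeps the example deterministic.
namespace = {
    "test_mustPass": test_mustPass,
    "helper_not_a_test": helper_not_a_test,
}
collected = collect_tests(namespace)
print(collected)  # ['test_mustPass']
```

Only the function with the test_ prefix is selected, which is the behavior we rely on later when we rename tests so they are not run automatically.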

Other languages and test frameworks

With a simple AI prompt:

    Can you translate the following code into JavaScript with Mocha and Chai?
    (please use suite, test, and assert, instead of describe, it, and expect)
    """python
    import pytest

    def test_mustPass():
        assert 1+2==3, "this test must pass, but it passed :("
    """

we get:

    const assert = require('chai').assert;

    suite('Simple Addition Tests', function() {
        test('mustPass', function() {
            assert.equal(1 + 2, 3, "this test must pass, but it passed :(");
        });
    });

(Mocha needs its TDD interface for suite and test, for example: mocha --ui tdd.)

The same can be done for Groovy with Spock:

    import spock.lang.Specification

    class SimpleAdditionSpec extends Specification {
        def "mustPass"() {
            expect:
            1 + 2 == 3
        }
    }

Test 2: to make it fail

The second test needs to fail, so we can see how it fails!

So, we add another test case:

    import pytest

    def test_mustPass():
        assert 1+2==3, "this test must pass, but it passed :("

    def test_mustFail():
        assert 1+2==4, "this test must fail, and it did :)"

Which will give the following result:

    ====================== short test summary info ===========================
    FAILED test.py::test_mustFail - AssertionError: this test must fail, and it did :)
    ===================== 1 failed, 1 passed in 0.01s ========================

This is of course a manual test.

Test 3: to make it crash (neither pass nor fail)

The 3rd test needs to raise an exception, so it can’t complete.

    import pytest

    def test_mustPass():
        assert 1+2==3, "this test must pass, but it passed :("

    def test_mustFail():
        assert 1+2==4, "this test must fail, and it did :)"

    def test_mustCrash():
        raise RuntimeError("CRASH! ;-)")
        # never reached, because the exception above stops the test
        assert 1+2==3, "this must crash, but it failed :("

Which will give the following result:

    ====================== short test summary info ===========================
    FAILED test.py::test_mustFail - AssertionError: this test must fail, and it did :)
    FAILED test.py::test_mustCrash - RuntimeError: CRASH! ;-)
    ===================== 2 failed, 1 passed in 0.01s ========================

Pytest doesn’t show much of a difference between these two failures, but one is an AssertionError (test failed) and the other one is a RuntimeError (test not completed / crashed / stopped). Some frameworks give these different colors / icons, like:

✔️ passed

❌ failed

⚠️ not completed / crashed / stopped
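The three categories can be sketched in a few lines of plain Python. This is only an illustration of the distinction, assuming that an AssertionError means "failed" and any other exception means "crashed"; classify is a hypothetical helper, not part of pytest.

```python
def must_pass():
    assert 1 + 2 == 3


def must_fail():
    assert 1 + 2 == 4, "this test must fail, and it did :)"


def must_crash():
    raise RuntimeError("CRASH! ;-)")


def classify(test_function):
    """Run one test function and report passed, failed, or crashed."""
    try:
        test_function()
    except AssertionError:
        return "failed"   # an expectation was not met
    except Exception:
        return "crashed"  # the test could not complete
    return "passed"


results = {f.__name__: classify(f) for f in (must_pass, must_fail, must_crash)}
print(results)
# {'must_pass': 'passed', 'must_fail': 'failed', 'must_crash': 'crashed'}
```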

🏋️ Exercise

Take the programming language of your choice.

Select a test framework for it (ask AI like ChatGPT about it, if you don’t know any).

Make 3 test cases: one that passes, one that fails, and one that crashes.

Be inspired by

Test 4: Let’s automate the passed, failed, and crashed test

Testing how something works is a great way to learn.

We test how things work, and how they fail so we can recover better from the fails.

To remember or share our knowledge, we can write it down as documentation.

A great way is to do documentation as unit-tests, because we can run them.

When all the unit-tests pass, then the documentation is up-to-date.

When a unit-test fails/crashes, then the documentation needs to be updated.

This is called live-documentation, because the documentation is alive and evolving with the system under development.

Let’s try with the 1st test (pass):

In order to automate our test cases, we first need to rename the prefix test_, so pytest won’t run them automatically.

    import pytest

    def toBeTested_mustPass():
        assert 1+2==3, "this test must pass, but it passed :("

Then we can add a new unit-test that we want pytest to run, so it must start with the test_ prefix:

    import pytest

    def toBeTested_mustPass():
        assert 1+2==3, "this test must pass, but it passed :("

    def test_toBeTested_mustPass():
        toBeTested_mustPass()
        assert True

This will of course pass:

    test.py . [100%]

    ========================== 1 passed in 0.00s =============================

We write assert True at the end to make the intent explicit: if toBeTested_mustPass failed, the test would stop at that call and never reach the final assert.

Let’s try to make it fail by replacing toBeTested_mustPass with toBeTested_mustFail, and see what happens:

    import pytest

    def toBeTested_mustFail():
        assert 1+2==4, "this test must fail, and it did :)"

    def test_toBeTested_mustPass():
        toBeTested_mustFail() # we changed this line from pass to fail
        assert True

Which will give the following result:

    ====================== short test summary info ===========================
    FAILED test.py::test_toBeTested_mustPass - AssertionError: this test must fail, and it did :)
    ========================== 1 failed in 0.01s =============================

Let’s try to contain the 2nd test (fail):

We need to use try and except (in other languages it is called try and catch):

    import pytest

    def toBeTested_mustFail():
        assert 1+2==4, "this test must fail, and it did :)"

    def test_toBeTested_mustFail():
        # Given
        errorMessage = None
        # When
        try:
            toBeTested_mustFail()
        except AssertionError as e:
            errorMessage = str(e)
        # Then (when run by pytest, the failed expression is appended to the message)
        assert errorMessage == "this test must fail, and it did :)\nassert (1 + 2) == 4"

Which will give the following result:

    test.py . [100%]

    ========================== 1 passed in 0.00s =============================

Many test frameworks contain error and exception handlers that can be used instead:

    import pytest

    def toBeTested_mustFail():
        assert 1+2==4, "this test must fail, and it did :)"

    def test_toBeTested_mustFail():
        with pytest.raises(AssertionError, match=r"this test must fail, and it did :\)\nassert \(1 \+ 2\) == 4"):
            toBeTested_mustFail()

But I really dislike it, because the readability is horrible.

I often set this rule: when code can’t be split into given, when, and then parts, it becomes harder to read. It is like with regular language. Compare the split version:

    When the function is called and expected to fail with an AssertionError
    Then the error message must match the expected message

with the single sentence:

    The function is called and expected to fail with an AssertionError, and the exception message should match the expected regular expression

Both are readable, but I will let you decide which one is easier to read.

I know that some programmers will disagree with me and that is fine.

We all have preferences and different contexts to work in.
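One possible middle ground, sketched here under my own assumptions, is a small helper that hides the try and except but still lets the test read as given, when, then. The helper capture_error is hypothetical and not part of pytest. The example uses a RuntimeError because its message is the same whether or not pytest runs the test.

```python
def capture_error(function, expected_type):
    """Call function; return the message of the expected exception, or None."""
    try:
        function()
    except expected_type as error:
        return str(error)
    return None


def toBeTested_mustCrash():
    raise RuntimeError("CRASH! ;-)")


def test_toBeTested_mustCrash():
    # Given
    expectedMessage = "CRASH! ;-)"
    # When
    errorMessage = capture_error(toBeTested_mustCrash, RuntimeError)
    # Then
    assert errorMessage == expectedMessage


test_toBeTested_mustCrash()
```

If the function raises a different exception type than expected, the helper does not swallow it, so the test still crashes visibly.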

Let’s try to contain the 3rd test (crash):

It is almost the same, except that we contain the RuntimeError instead of the AssertionError:

    def toBeTested_mustCrash():
        raise RuntimeError("CRASH! ;-)")
        # never reached, because the exception above stops the function
        assert 1+2==3, "this must crash, but it failed :("

    def test_toBeTested_mustCrash():
        # given
        errorMessage = None
        # when
        try:
            toBeTested_mustCrash()
        except RuntimeError as e:
            errorMessage = str(e)
        # then
        assert errorMessage == "CRASH! ;-)"

Which will give the following result:

    test.py . [100%]

    ========================== 1 passed in 0.00s =============================

It’s very straightforward.

🏋️ Exercise

Make a unit test for each of your 3 test cases that passed, failed and crashed.

Data driven testing

Sometimes it is better to have a single test that is data driven, than to have multiple tests.

The balance between them is the readability of the test.

Let’s try to make the first 3 tests (pass, fail, crash) data driven.

The original tests looked like this:

    import pytest

    def test_mustPass():
        assert 1+2==3, "this test must pass, but it passed :("

    def test_mustFail():
        assert 1+2==4, "this test must fail, and it did :)"

    def test_mustCrash():
        raise RuntimeError("CRASH! ;-)")
        assert 1+2==3, "this must crash, but it failed :("

Which can be made into:

    import pytest
    from dataclasses import dataclass

    @dataclass
    class TestCase:
        name: str
        input: int
        expected: int
        raisesException: bool

    testCases = [
        TestCase(name="1 must_pass", input=1+2, expected=3, raisesException=False),
        TestCase(name="2 must_fail", input=1+2, expected=4, raisesException=False),
        TestCase(name="3 must_crash", input=1+2, expected=4, raisesException=True),
    ]

    ids = [testCase.name for testCase in testCases]
    params = [testCase for testCase in testCases]

    @pytest.mark.parametrize("testData", params, ids=ids)
    def test_cases(testData:TestCase):
        # when
        if testData.raisesException:
            raise RuntimeError("CRASH! ;-)")
        else:
            assert testData.input==testData.expected, "this test must fail, and it did :)"

Which will give the following result:

    ====================== short test summary info ===========================
    FAILED test.py::test_cases[2 must_fail] - AssertionError: this test must fail, and it did :)
    FAILED test.py::test_cases[3 must_crash] - RuntimeError: CRASH! ;-)
    ===================== 2 failed, 1 passed in 0.02s ========================

I like to use something called @dataclass, which we can build test cases from and which is supported by autocomplete!

    ...
    from dataclasses import dataclass

    @dataclass
    class TestCase:
        name: str
        input: int
        expected: int
        raisesException: bool
    ...

Then I can define my test cases as a list of TestCase objects:

    ...
    testCases = [
        TestCase(name="1 must_pass", input=1+2, expected=3, raisesException=False),
        TestCase(name="2 must_fail", input=1+2, expected=4, raisesException=False),
        TestCase(name="3 must_crash", input=1+2, expected=4, raisesException=True),
    ]
    ...

Then we transform the testCases into something pytest understands:

    ...
    ids = [testCase.name for testCase in testCases]
    params = [testCase for testCase in testCases]

    @pytest.mark.parametrize("testData", params, ids=ids)
    def test_cases(testData:TestCase):
        if testData.raisesException:
            raise RuntimeError("CRASH! ;-)")
        else:
            assert testData.input==testData.expected, "this test must fail, and it did :)"

🏋️ Exercise

Make your 3 original test cases (that passed, failed, and crashed) data driven, so you can experience how a data driven test does all 3 things. (Some test frameworks stop at the first failure, which is not good.)

Making unit-tests understandable

It is really important to shape the test cases so that they are easy to understand.

A test case that is not understandable is valueless, and we would be better off without it.

If we take the example from previous chapter:

    import pytest
    from dataclasses import dataclass

    @dataclass
    class TestCase:
        name: str
        input: int
        expected: int
        raisesException: bool

    testCases = [
        TestCase(name="1 must_pass", input=1+2, expected=3, raisesException=False),
        TestCase(name="2 must_fail", input=1+2, expected=4, raisesException=False),
        TestCase(name="3 must_crash", input=1+2, expected=4, raisesException=True),
    ]

    ids = [testCase.name for testCase in testCases]
    params = [testCase for testCase in testCases]

    @pytest.mark.parametrize("testData", params, ids=ids)
    def test_cases(testData:TestCase):
        # when
        if testData.raisesException:
            raise RuntimeError("CRASH! ;-)")
        else:
            assert testData.input==testData.expected, "this test must fail, and it did :)"

Then it is longer than the following example:

    import pytest

    @pytest.mark.parametrize(
        "i, e, rte", [
            (1+2, 3, False), # this test must pass
            (1+2, 4, False), # this test must fail
            (1+2, 3, True)   # this test must crash
        ],
        ids=["must_pass", "must_fail", "must_crash"]
    )
    def test_cases(i, e, rte):
        if rte:
            raise RuntimeError("CRASH! ;-)")
        else:
            assert i==e, "this test must fail, and it did :)"

Except, it is much harder to understand and maintain:

- What do i, e, and rte mean?

- The ids and the comments need to be paired and updated manually.

- The parameters can be figured out here, but with 7+ parameters, different value lengths, and 10+ test cases this would be hell to maintain!

So, please:

- Use readable parameter names like number1 + number2 == result and not n1+n2==r.

- Group parameters into Inputs and Expected, so it is easy to understand what transformation needs to be done (not necessarily how it is done). In Python we can use @dataclass.

- Use test ids/descriptions to make it easier to see which tests have failed.

- Try to create the context the test case needs to work within. Then it will be easier to understand why something works the way it works.

- Additionally, use a Skipped/Ignored category in case you want a test case skipped (described in Skipping tests).
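The first three points can be sketched like this. The class names Inputs, Expected, and AdditionCase and the two cases are hypothetical, chosen only to show the grouping; a plain loop is used so the sketch runs without any test framework.

```python
from dataclasses import dataclass


@dataclass
class Inputs:
    number1: int
    number2: int


@dataclass
class Expected:
    result: int


@dataclass
class AdditionCase:
    description: str
    inputs: Inputs
    expected: Expected


additionCases = [
    AdditionCase(
        description="two small positive numbers",
        inputs=Inputs(number1=1, number2=2),
        expected=Expected(result=3),
    ),
    AdditionCase(
        description="a negative and a positive number",
        inputs=Inputs(number1=-1, number2=1),
        expected=Expected(result=0),
    ),
]


def test_addition():
    for case in additionCases:
        # When
        result = case.inputs.number1 + case.inputs.number2
        # Then: the description tells us which case failed
        assert result == case.expected.result, case.description


test_addition()
```

Each case now reads as "these inputs should give this result", and the description doubles as the test id.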

🏋️ Exercise

Go through your previous exercises and evaluate if they are understandable – if not, then please improve them.

Skipping tests

Sometimes we find a bug, and a test will fail.

There is a dangerous question to ask, one that many have opinions about.

Imagine a test starts to fail because of a bug. What should we do?

- Fix the bug as soon as possible!

- Let the test fail until it is fixed?

- Mark the test with @Ignore and a Jira bug, so we can fix it soon.

I have tried multiple approaches and all of them have a price.

- When it is not possible to fix all bugs, then we fix only the most critical ones.

- When we are not in production yet, then a bug might be critical, but not urgent.

- When we see a red test report every day, we get used to the red color. Non-critical bugs can then make it impossible to see the critical ones.

So, an @Ignore or @skip function can be a good thing, as long as we remember to give it a comment or a link to a Jira story/bug.

In Pytest we can skip tests with:

    import pytest

    def test_mustPass():
        assert 1+2==3, "this test must pass, but it passed :("

    @pytest.mark.skip(reason="Jira: bug-101")
    def test_mustFail():
        assert 1+2==4, "this test must fail, and it did :)"

    @pytest.mark.skip(reason="Jira: bug-102")
    def test_mustCrash():
        raise RuntimeError("CRASH! ;-)")
        assert 1+2==3, "this must crash, but it failed :("

Which will give the following result:

    test.py . [100%]

    ===================== 1 passed, 2 skipped in 0.01s =======================

Then we can always read about the bug and its status in the Jira bug.
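Other frameworks have a similar feature. As a sketch, here is the same idea with Python's built-in unittest module and its unittest.skip decorator. The class name SkippingExample is hypothetical and the Jira ids are the same made-up ones as above.

```python
import io
import unittest


class SkippingExample(unittest.TestCase):
    def test_mustPass(self):
        self.assertEqual(1 + 2, 3)

    @unittest.skip("Jira: bug-101")
    def test_mustFail(self):
        self.assertEqual(1 + 2, 4)

    @unittest.skip("Jira: bug-102")
    def test_mustCrash(self):
        raise RuntimeError("CRASH! ;-)")


suite = unittest.defaultTestLoader.loadTestsFromTestCase(SkippingExample)
# The runner output goes to a buffer so only our summary is printed.
result = unittest.TextTestRunner(stream=io.StringIO()).run(suite)

# result.skipped holds (test, reason) pairs, so the bug links stay visible.
skipReasons = sorted(reason for _test, reason in result.skipped)
print(skipReasons)  # ['Jira: bug-101', 'Jira: bug-102']
```

The reasons end up in the test result, so a report can list every skipped test together with its bug link.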

A data driven test can be skipped a little differently, by adding a property called skip to the TestCase class, assigning it in each TestCase, and then adding the skip check at the start of the test implementation:

    import pytest
    from dataclasses import dataclass

    @dataclass
    class TestCase:
        name: str
        input: int
        expected: int
        raisesException: bool
        skip: str  # reason for skipping, or None to run the test case

    testCases = [
        TestCase(name="1 must_pass", input=1+2, expected=3, raisesException=False, skip=None),
        TestCase(name="2 must_fail", input=1+2, expected=4, raisesException=False, skip="Jira: bug-101"),
        TestCase(name="3 must_crash", input=1+2, expected=4, raisesException=True, skip="Jira: bug-102"),
    ]

    ids = [testCase.name for testCase in testCases]
    params = [testCase for testCase in testCases]

    @pytest.mark.parametrize("testData", params, ids=ids)
    def test_cases(testData:TestCase):
        if testData.skip:
            pytest.skip(testData.skip)
        if testData.raisesException:
            raise RuntimeError("CRASH! ;-)")
        else:
            assert testData.input==testData.expected, "this test must fail, and it did :)"

Which will give the following result:

    test.py . [100%]

    ===================== 1 passed, 2 skipped in 0.02s =======================

🏋️ Exercise

Try to make a skip in one of your regular tests and one in the data-driven tests (you may skip only one of the sub-tests in the data-driven tests).

Reporting

Sometimes it can be a great idea to make a test report in HTML.

It makes it easier to get a quick overview and to navigate through the test cases.

For more complex tests it can also give a better overview in a visual way.

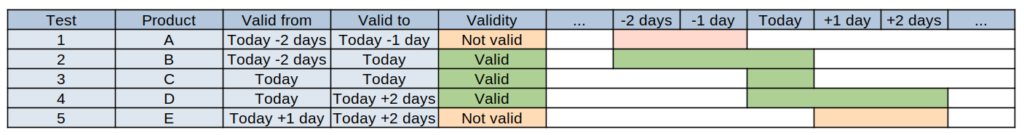

A table like this:

    TestCase(test=1, product="A", validFrom=today(days=-2), validTo=today(days=-1), expectedValidity=TRUE)
    TestCase(test=2, product="B", validFrom=today(days=-2), validTo=today(), expectedValidity=FALSE)
    TestCase(test=3, product="C", validFrom=today(), validTo=today(), expectedValidity=FALSE)
    TestCase(test=4, product="D", validFrom=today(), validTo=today(days=2), expectedValidity=TRUE)
    TestCase(test=5, product="E", validFrom=today(days=1), validTo=today(days=2), expectedValidity=FALSE)

can be turned into a graph, which is much more readable, especially when the complexity grows and we add, for example, time zones.

I will not go much into reporting in this chapter, but will write a separate one, which will contain all kind of good ideas, incl. testing of report templates.
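Until then, here is a rough sketch of how the validity table could be drawn as a text timeline. The names ValidityCase and render_row are hypothetical, the dates are stored as day offsets relative to today, and treating both end dates as inclusive is my assumption for the drawing only. The expected values are copied from the table without interpreting them.

```python
from dataclasses import dataclass


@dataclass
class ValidityCase:
    test: int
    product: str
    validFromOffset: int  # days relative to today
    validToOffset: int    # days relative to today
    expectedValidity: bool


cases = [
    ValidityCase(1, "A", -2, -1, True),
    ValidityCase(2, "B", -2, 0, False),
    ValidityCase(3, "C", 0, 0, False),
    ValidityCase(4, "D", 0, 2, True),
    ValidityCase(5, "E", 1, 2, False),
]


def render_row(case, first_day=-2, last_day=2):
    """Draw one case as a bar: '#' for days inside the range, '.' outside."""
    bar = "".join(
        "#" if case.validFromOffset <= day <= case.validToOffset else "."
        for day in range(first_day, last_day + 1)
    )
    return f"{case.product} |{bar}| expected valid: {case.expectedValidity}"


for case in cases:
    print(render_row(case))
```

The middle column of each bar is today, so it is easy to see at a glance where each validity period lies relative to it.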

Conclusion

Let’s wrap up our journey into unit testing, shall we? 🚀

Needed tools

There are many programming languages and even more test-frameworks. To compare them better, it is recommended to test them out.

How to Learn a Test Framework

We have learned that creating small, incremental tests helps us understand the outcomes and build knowledge of the test framework, similar to the principles of Test-Driven Development (TDD).

Test 1: To Make It Pass

We have learned how to set up a basic test that is designed to pass, involving the installation of the framework, writing a simple test, and ensuring it runs successfully.

Test 2: To Make It Fail

We have learned the importance of including a test case meant to fail, as it helps us understand how the test framework handles and reports failures.

Test 3: To Make It Crash

We have learned to create a test that raises an exception to simulate a crash, which helps distinguish between assertion errors and runtime errors in the test results.

Automating Tests

We have learned to automate tests by renaming methods to avoid automatic execution, using wrapper tests to verify behavior, and ensuring non-crashing tests pass successfully.

Containing Failures and Crashes

We have learned to handle expected errors using try-except blocks (or try-catch in other languages) to manage assertion errors and runtime exceptions effectively.

Data-Driven Testing

We have learned to consolidate multiple tests into a single parameterized test using @dataclass to define test cases, which enhances readability and maintainability.

Making Tests Understandable

We have learned the importance of clear and maintainable tests by using descriptive parameter names, grouping inputs and expected results, and avoiding cryptic variable names.

Skipping Tests

We have learned to mark tests that should not run due to known issues with @skip or @Ignore, and to provide reasons or links to bug tracking systems for reference.

Reporting

We have learned the value of HTML reports for better visual representation and navigation of test results, especially useful in more complex scenarios.

Congratulations – Lesson complete!