Introduction

The world changes faster and faster.

New frameworks come out, and old frameworks die.

Sometimes we just need to change from one to another.

How do we do that?

And how can we keep up?

What is SpecFlow?

SpecFlow is an open-source tool that aids in Behavior-Driven Development (BDD) for .NET applications. It bridges the gap between technical and non-technical stakeholders by allowing developers to write executable specifications in the Gherkin language:

Given linkedin.com is opened in a browser

When a user logs in with incorrect password

Then the following error message is shown: "🤬"

A test engineer writes the glue code that translates the Gherkin steps into code, so a computer can execute them.

By using the Gherkin language, SpecFlow enables clear communication and collaboration among team members, ultimately enhancing the quality and reliability of software projects.
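To make the idea of glue code more concrete, here is a toy sketch in C#, the language SpecFlow step definitions are written in. It is not the SpecFlow or ReqnRoll API: there, glue code lives in classes marked `[Binding]`, with methods marked `[Given]`, `[When]` and `[Then]` whose patterns are matched against the steps. The hypothetical `stepDefinitions` list and `RunStep` function below only imitate that matching with plain regular expressions.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Toy step registry: each Gherkin step pattern is bound to the C# code that runs it.
// Hypothetical, for illustration only; the actions just record what a real test would do.
var actions = new List<string>();
var stepDefinitions = new List<(Regex Pattern, Action<Match> Run)>
{
    (new Regex(@"^Given (\S+) is opened in a browser$"),
        m => actions.Add($"open {m.Groups[1].Value}")),
    (new Regex(@"^When a user logs in with incorrect password$"),
        m => actions.Add("log in with a wrong password")),
    (new Regex("^Then the following error message is shown: \"(.*)\"$"),
        m => actions.Add($"assert error message is '{m.Groups[1].Value}'")),
};

// Executes one Gherkin step by running the first definition whose pattern matches it.
void RunStep(string step)
{
    foreach (var (pattern, run) in stepDefinitions)
    {
        var match = pattern.Match(step);
        if (match.Success)
        {
            run(match);
            return;
        }
    }
    throw new InvalidOperationException($"No step definition matches: {step}");
}

RunStep("Given linkedin.com is opened in a browser");
RunStep("When a user logs in with incorrect password");
RunStep("Then the following error message is shown: \"🤬\"");
actions.ForEach(Console.WriteLine);
```

Note how the captured groups (`linkedin.com`, the error message) become parameters for the code; this is what lets one step definition serve many scenarios.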

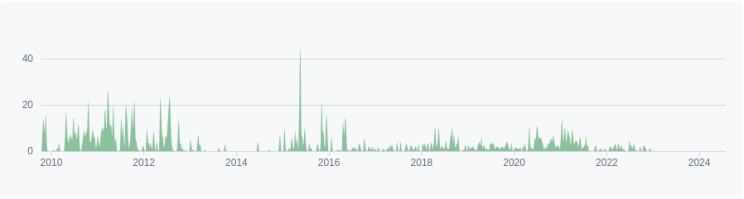

Is SpecFlow dead?

Limited Updates: SpecFlow has seen a reduction in active development and fewer updates, as evidenced by the release history on GitHub, with the last stable release in May 2022 and the last beta version in February 2023.

Forked Project: Although SpecFlow is open-source, the name “SpecFlow” is trademarked by Tricentis. So when the SpecFlow project was forked on GitHub, the fork had to be renamed, and it became ReqnRoll (which amusingly reminds me of a “Rick Roll”).

This looks dire for SpecFlow.

To upgrade or not to upgrade?

I asked ChatGPT 4o this question:

SpecFlow seems to be dead.

What would the reasons be to upgrade or not to upgrade to ReqnRoll?

Can you write it as short bullet points?

And I got the following answer:

Reasons to Upgrade to ReqnRoll

* Active Development: Regular updates and support.

* New Features: Enhanced functionality and tools.

* Active Community: More resources and community support.

* Better Compatibility: Improved integration with modern tools.

* Performance Boost: Faster and more efficient testing.

Reasons Not to Upgrade to ReqnRoll

* Migration Effort: Time-consuming and costly transition.

* Stability: SpecFlow might still meet current needs.

* Integration Issues: Potential disruptions with existing tools.

* Feature Gaps: ReqnRoll may lack some SpecFlow functionalities.

* Learning Curve: Need for retraining and adjustment.

I would like to add that:

- Security Concerns: A test framework does not run in production, so a lack of security updates is less of a problem.

- Proven Stability: The current version has been working for 2 years, so it will probably work for some time yet.

- ReqnRoll is a new tool: Not all features have been migrated from SpecFlow to ReqnRoll. This is partially covered by the “Feature Gaps” point.

So, an upgrade is not urgent.

An important question is: “Why fix it, when it ain’t broken?”

Answer: Because everything else gets upgraded, such as .NET and other dependencies. If we wait too long and something finally breaks, an upgrade might no longer be possible, and a lot may need to be rewritten. That is a very high risk, even though it is not yet urgent.

How to upgrade an existing test setup

The following approach can be used for:

- SpecFlow → ReqnRoll

- SpecFlow → something else

- Something third → something fourth

I asked ChatGPT 4o again:

any suggestions on how to upgrade an existing test suite from one framework to another? Please answer in steps and each step must be short.

It gave me 16 bullet points:

1. Evaluate New Framework:..., 2. Plan Migration: ...,

3. Set Up Framework: ..., 4. Analyze Tests: ...,

5. Map Features: ..., 6. Prioritize Tests: ...,

7. Create Samples: ..., 8. Automate Migration: ...,

9. Manual Migration: ..., 10. Validate: ...,

11. Refactor: ..., 12. Update Docs: ...,

13. Train Team: ..., 14. Integrate CI/CD: ...,

15. Retire Old Framework: ..., 16. Monitor: ...

I asked:

other things to remember?

And it gave me another list:

17. Backup Original Test Suite: ..., 18. Version Control: ...,

19. Consistency: ..., 20. Dependencies: ...,

21. Error Handling: ..., 22. Performance: ...,

23. Parallel Execution: ..., 24. Reporting: ...,

25. Stakeholder Communication: ..., 26. Feedback Loop: ...,

27. Continuous Improvement: ..., 28. Documentation: ...,

29. Compliance: ..., 30. Cross-Platform Testing: ...,

31. Resource Allocation: ...

I used it as a checklist and wrote the next three parts:

- “How to select a new framework”

- “How to upgrade from one framework to another”

- “How to train the test team”

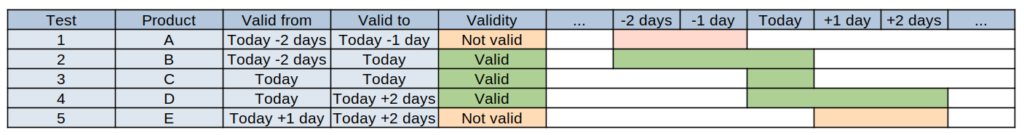

How to select a new framework

The best way to understand a new framework is to actually test it.

Make a test that passes, one that fails, and one that throws an exception.

Make a data-driven test, try out the reporting, etc.
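A framework-agnostic sketch of such probe tests, assuming nothing about the candidate framework: the hypothetical `Run` helper below plays the role of the framework's test runner, so it is easy to see which outcomes a real framework should report and tell apart (a pass, an assertion failure, an unexpected exception, and one result per data row). In a real evaluation, write the same probes in the framework itself and compare its reports.

```csharp
using System;
using System.Collections.Generic;

var report = new List<string>();

// Stand-in for a framework's runner: runs one test and records the outcome,
// keeping the exception type and message so the reason for a failure is visible.
void Run(string name, Action test)
{
    try
    {
        test();
        report.Add($"{name}: passed");
    }
    catch (Exception ex)
    {
        report.Add($"{name}: failed ({ex.GetType().Name}: {ex.Message})");
    }
}

// Minimal stand-in for a framework's Assert.AreEqual / Assert.Equal.
void AssertEqual(int expected, int actual)
{
    if (expected != actual)
        throw new Exception($"Expected {expected} but was {actual}");
}

Run("passes", () => AssertEqual(4, 2 + 2));
Run("fails", () => AssertEqual(5, 2 + 2));
Run("throws", () => throw new InvalidOperationException("boom"));

// Data-driven: the same test body runs once per row of data.
foreach (var (a, b, sum) in new[] { (1, 1, 2), (2, 3, 5), (10, 4, 14) })
    Run($"adds {a} and {b}", () => AssertEqual(sum, a + b));

report.ForEach(Console.WriteLine);
```

A good framework should make the difference between "fails" and "throws" obvious in its report, and should list each data row as a separate result.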

I have written an article on how to test a test framework (it is about unit tests, but the approach can be used for other kinds of tests too): https://bartek.dk/unit-testing-the-unit-testing/

The key point here is, the more we use something, the better we understand it.

So, start with the basics and do a pilot migration of the most critical test cases to see how they perform.

How to upgrade from one framework to another

When we have an older, working test suite, we should keep it and keep using it.

I have learned from experience that a larger upgrade can end up blocking new tasks. Why? Because all the time goes into making the test suite work again.

When a larger upgrade needs to happen, create a copy of the test suite (a new branch, a fork, or a completely new repository).

Run both the legacy test suite and the new one.

When something breaks in the legacy test suite, ask yourself: Can I fix it in 60 minutes or less? If yes, fix it in the legacy test suite. If not, migrate the test case to the new test suite and deprecate it in the legacy test suite.

When the test team has extra time (I know: wishful thinking 😅), migrate some test cases to the new test suite, starting with the most critical ones.
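The side-by-side approach and the 60-minute rule can be sketched as a small migration ledger. Everything here is hypothetical (the test-case names, the criticality scale where 1 is most critical, and the `HandleBrokenLegacyTest` and `NextToMigrate` helpers); in practice the ledger might just be a spreadsheet or a tag on each test case.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Which suite owns each test case while both suites run side by side.
// Criticality 1 = most critical; Migrated = the test case now lives in the new suite.
var ledger = new Dictionary<string, (int Criticality, bool Migrated)>
{
    ["Login with wrong password"] = (1, false),
    ["Search for jobs"] = (2, false),
    ["Update profile picture"] = (3, false),
};

// The 60-minute rule: quick fixes stay in the legacy suite; anything bigger is migrated.
string HandleBrokenLegacyTest(string testCase, int estimatedFixMinutes)
{
    if (estimatedFixMinutes <= 60)
        return $"Fix '{testCase}' in the legacy suite";

    ledger[testCase] = (ledger[testCase].Criticality, true);
    return $"Migrate '{testCase}' to the new suite and deprecate it in the legacy suite";
}

// When there is spare time: the most critical test cases that are not migrated yet.
IEnumerable<string> NextToMigrate(int count) =>
    ledger.Where(entry => !entry.Value.Migrated)
          .OrderBy(entry => entry.Value.Criticality)
          .Take(count)
          .Select(entry => entry.Key);

Console.WriteLine(HandleBrokenLegacyTest("Search for jobs", 15));
Console.WriteLine(HandleBrokenLegacyTest("Login with wrong password", 240));
Console.WriteLine(string.Join(", ", NextToMigrate(1)));
```

The point of the ledger is that the legacy suite keeps running the whole time; it only shrinks as test cases move over.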

Evaluate automatic migration vs. manual migration. Sometimes a script can migrate thousands of test cases in a short time. Sometimes it is simply cheaper to let people copy and paste manually than to make a script work.
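For SpecFlow → ReqnRoll, such a script can be quite small. The sketch below assumes that renaming the `TechTalk.SpecFlow` namespace to `Reqnroll` is the main source-code change your step definitions need; package references in the project files (and any SpecFlow plugins) still have to be swapped separately, so check ReqnRoll's migration guide before relying on it. `MigrateSource` and `MigrateFolder` are hypothetical names. Run it on the copy of the test suite, never on the legacy one.

```csharp
using System;
using System.IO;
using System.Text.RegularExpressions;

// Rewrites SpecFlow namespaces to ReqnRoll, e.g. "using TechTalk.SpecFlow;" -> "using Reqnroll;".
// The \b word boundaries avoid touching longer identifiers such as "TechTalk.SpecFlowX".
string MigrateSource(string source) =>
    Regex.Replace(source, @"\bTechTalk\.SpecFlow\b", "Reqnroll");

// Applies MigrateSource to every .cs file below root and returns how many files changed.
int MigrateFolder(string root)
{
    var changed = 0;
    foreach (var path in Directory.EnumerateFiles(root, "*.cs", SearchOption.AllDirectories))
    {
        var original = File.ReadAllText(path);
        var migrated = MigrateSource(original);
        if (migrated == original) continue; // leave untouched files alone

        File.WriteAllText(path, migrated);
        changed++;
    }
    return changed;
}

// Usage: dotnet run -- <path-to-copied-test-suite>
if (args.Length == 1)
    Console.WriteLine($"{MigrateFolder(args[0])} file(s) migrated");
```

Since the script runs on a branch, `git diff` afterwards shows exactly what it changed, which makes it easier to judge whether the automatic route is paying off or whether the remaining cases are cheaper to migrate by hand.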

Deprecate the old test suite when it is no longer needed.

How to train the test team

Remember to train the team to use the new test suite.

1. Example test cases

Migrate some of the test cases yourself, so the team can take inspiration from your code.

2. Unit tests of helper classes as live documentation

Remember to write unit tests for your helper classes, to show examples of how the helper classes are supposed to be used. (If you already have unit tests in the legacy test suite, it will be easy to recreate the helper classes in the new test suite.)

Proper unit tests can serve as documentation! They also make it easier to migrate from one framework to another.
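As an illustration, here is a hypothetical helper (`FormatTestUserId`) with tests whose names read like documentation. To keep the sketch self-contained, the tests are plain local functions with a minimal `Expect` check; in a real suite they would be `[Fact]` (xUnit), `[Test]` (NUnit) or `[TestMethod]` (MSTest) methods using that framework's asserts.

```csharp
using System;

// Hypothetical test helper: formats a user id with at least five digits, e.g. 42 -> "00042".
string FormatTestUserId(int id) =>
    id < 0 ? throw new ArgumentOutOfRangeException(nameof(id)) : $"{id:D5}";

// Each test name states one rule of the helper, so the tests double as documentation.
void PadsShortIdsWithLeadingZeros() => Expect(FormatTestUserId(42) == "00042");
void KeepsFiveDigitIdsUnchanged() => Expect(FormatTestUserId(12345) == "12345");
void DoesNotTruncateLongerIds() => Expect(FormatTestUserId(123456) == "123456");
void RejectsNegativeIds()
{
    try
    {
        FormatTestUserId(-1);
    }
    catch (ArgumentOutOfRangeException)
    {
        return; // expected
    }
    throw new Exception("Expected ArgumentOutOfRangeException for a negative id");
}

// Minimal stand-in for a framework's Assert.True.
void Expect(bool condition)
{
    if (!condition) throw new Exception("Expectation failed");
}

PadsShortIdsWithLeadingZeros();
KeepsFiveDigitIdsUnchanged();
DoesNotTruncateLongerIds();
RejectsNegativeIds();
Console.WriteLine("All helper tests passed");
```

When the helper is recreated in the new test suite, these tests can be moved along with it and tell right away whether it still behaves the same.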

3. AI to explain code

You can also use AI to help with the training (I have written an article about that: https://bartek.dk/#ai-learning).

It can translate code from one coding language to another (and from one test framework to another!)

It can also help translate a piece of code into Gherkin, which is especially useful for short, cryptic lines of code:

Please translate the following C# Code into Gherkin:

string result = $"{10:D5}";

Translated into Gherkin:

Scenario: Formatting the integer 10 with leading zeros to ensure it is 5 digits long

Given an integer value of 10

When the integer is formatted using the pattern "D5" within an interpolated string

Then the result should be a string "00010"

How cool is that?
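The AI's translation is also easy to verify: run the original line and compare its output with the `Then` step. A minimal C# check:

```csharp
using System;

// Executable counterpart of the Gherkin scenario: "D5" formats an integer
// with at least five digits, padding with leading zeros.
string result = $"{10:D5}";
Console.WriteLine(result);          // 00010

// D5 only sets a minimum number of digits; longer numbers are not truncated.
Console.WriteLine($"{123456:D5}");  // 123456
```

The second line is a good follow-up question to ask the AI as well, since "5 digits long" in the scenario title could be misread as a fixed width.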

Conclusion

Selecting a framework is done by testing it out

Changing a framework doesn’t have to be done all at once

Training the test team can be done by examples, unit-tests and AI.

Our world is already changing fast.

AI will make it change even faster.

AI (with AI-learning) will also help us adapt faster.
